Inference

This page lists the minimal commands needed to run MegaPose inference. Before running them, set the environment variable $HAPPYPOSE_DATA_DIR as explained in the README.
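Every path on this page is relative to $HAPPYPOSE_DATA_DIR, so it can help to check the variable before running anything. The sketch below is not part of happypose; `example_dir` is a hypothetical helper that only builds the example path described on this page and fails early when the variable is missing.

```python
import os
from pathlib import Path


def example_dir(name: str) -> Path:
    """Return $HAPPYPOSE_DATA_DIR/examples/<name>.

    Hypothetical helper (not a happypose function): it raises early
    if the environment variable is unset or empty.
    """
    data_dir = os.environ.get("HAPPYPOSE_DATA_DIR")
    if not data_dir:
        raise RuntimeError("HAPPYPOSE_DATA_DIR is not set; see the README.")
    return Path(data_dir) / "examples" / name
```

For instance, `example_dir("barbecue-sauce")` gives the directory that holds the input files of the example used below.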

1. Download pre-trained pose estimation models

python -m happypose.toolbox.utils.download --megapose_models

2. Download the example

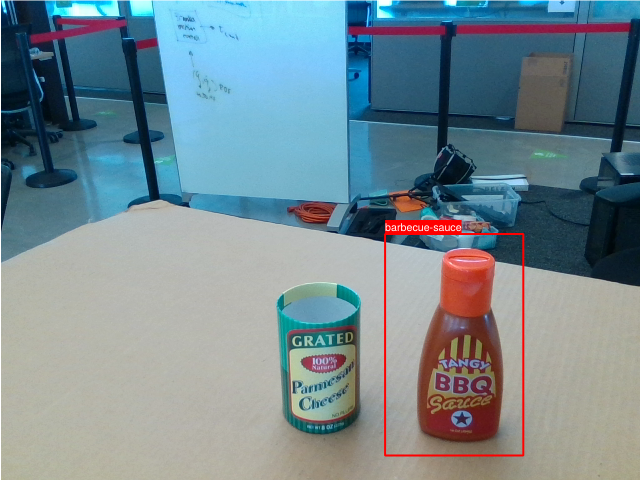

We estimate the pose for a barbecue sauce bottle (from the HOPE dataset, not used during training of MegaPose).

python -m happypose.toolbox.utils.download --examples barbecue-sauce

The input files are the following:

    $HAPPYPOSE_DATA_DIR/examples/barbecue-sauce/
        image_rgb.png
        image_depth.png
        camera_data.json
        inputs/object_data.json
        meshes/hope-obj_000002.ply
        meshes/hope-obj_000002.png

- image_rgb.png is an RGB image of the scene. We recommend using a 4:3 aspect ratio.

- image_depth.png (optional) contains depth measurements, with values in mm. You can leave out this file if you don't have depth measurements.

- camera_data.json contains the 3x3 camera intrinsic matrix K and the camera resolution in [h,w] format.

      {"K": [[605.9547119140625, 0.0, 319.029052734375], [0.0, 605.006591796875, 249.67617797851562], [0.0, 0.0, 1.0]], "resolution": [480, 640]}

- inputs/object_data.json contains a list of object detections. For each detection, it provides the 2D bounding box in the image (in [xmin, ymin, xmax, ymax] format) and the label of the object. In this example, there is a single object detection. The bounding box is only used to compute an initial depth estimate of the object, which is then refined by our approach. The bounding box does not need to be extremely precise (see below).

      [{"label": "hope-obj_000002", "bbox_modal": [384, 234, 522, 455]}]

- meshes is a directory containing the object's mesh. Mesh units are expected to be in millimeters. In this example, we use a mesh in .ply format. The code also supports .obj meshes, but you will have to make sure that the objects are rendered correctly with our renderer.
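When preparing your own inputs, a quick check of the two JSON files can catch swapped resolution or bounding-box coordinates early. The sketch below uses only the standard library and the values from this example; `check_camera_data` and `check_detections` are hypothetical helpers, and the checks they perform are illustrative assumptions rather than requirements stated by happypose.

```python
import json


def check_camera_data(camera: dict) -> tuple:
    """Validate camera_data.json content; return (fx, fy, cx, cy, height, width)."""
    K = camera["K"]
    if len(K) != 3 or any(len(row) != 3 for row in K):
        raise ValueError("K must be a 3x3 matrix")
    height, width = camera["resolution"]  # stored in [h, w] order
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    return fx, fy, cx, cy, height, width


def check_detections(detections: list, height: int, width: int) -> list:
    """Return (label, bbox) pairs after checking each box lies inside the image."""
    checked = []
    for det in detections:
        xmin, ymin, xmax, ymax = det["bbox_modal"]  # [xmin, ymin, xmax, ymax]
        # Assumed sanity check: a non-empty box within the image bounds.
        if not (0 <= xmin < xmax <= width and 0 <= ymin < ymax <= height):
            raise ValueError(f"bad bounding box for {det['label']}")
        checked.append((det["label"], (xmin, ymin, xmax, ymax)))
    return checked


# Contents of camera_data.json and inputs/object_data.json from this example.
camera = json.loads(
    '{"K": [[605.9547119140625, 0.0, 319.029052734375],'
    ' [0.0, 605.006591796875, 249.67617797851562],'
    ' [0.0, 0.0, 1.0]], "resolution": [480, 640]}'
)
detections = json.loads(
    '[{"label": "hope-obj_000002", "bbox_modal": [384, 234, 522, 455]}]'
)

fx, fy, cx, cy, height, width = check_camera_data(camera)
print(check_detections(detections, height, width))
```

To check files on disk, replace the two `json.loads` calls with `json.load` on the opened files.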

You can visualize the input detections using:

python -m happypose.pose_estimators.megapose.scripts.run_inference_on_example barbecue-sauce --vis-detections

3. Run pose estimation and visualize results

Run inference with the following command:

python -m happypose.pose_estimators.megapose.scripts.run_inference_on_example barbecue-sauce --run-inference --vis-poses

By default, the model uses only the RGB input. You can use one of our RGB-D MegaPose models with the --model argument. Please see our Model Zoo for all available models.

The previous command will generate the following file:

    $HAPPYPOSE_DATA_DIR/examples/barbecue-sauce/
        outputs/object_data_inf.json

A default object_data.json is provided if you prefer not to run the model.

This file contains a list of objects with their estimated poses. For each object, the estimated pose is denoted TWO (the world coordinate frame corresponds to the camera frame). It is composed of a quaternion and a 3D translation:

[{"label": "barbecue-sauce", "TWO": [[0.5453961536730983, 0.6226545207599095, -0.43295293693197473, 0.35692612413663855], [0.10723329335451126, 0.07313819974660873, 0.45735278725624084]]}]
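Downstream code often needs the pose as a 4x4 homogeneous matrix rather than a quaternion and a translation. The sketch below shows one way to convert an entry of this file using only the standard library. It assumes the quaternion is stored scalar-last, as (x, y, z, w); this page does not state the ordering or the translation units, so verify both against the library before relying on the result. `pose_to_matrix` is a hypothetical helper, not a happypose function.

```python
import json


def pose_to_matrix(two: list) -> list:
    """Convert [quaternion, translation] into a 4x4 matrix (nested lists).

    ASSUMPTION: the quaternion is ordered (x, y, z, w), scalar last.
    """
    (x, y, z, w), (tx, ty, tz) = two
    # Normalize to guard against rounding in the stored values.
    n = (x * x + y * y + z * z + w * w) ** 0.5
    x, y, z, w = x / n, y / n, z / n, w / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w), tx],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w), ty],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y), tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


# Entry copied from the example output above.
results = json.loads(
    '[{"label": "barbecue-sauce", "TWO": '
    '[[0.5453961536730983, 0.6226545207599095, '
    '-0.43295293693197473, 0.35692612413663855], '
    '[0.10723329335451126, 0.07313819974660873, 0.45735278725624084]]}]'
)
for obj in results:
    T = pose_to_matrix(obj["TWO"])
    print(obj["label"])
    for row in T:
        print(row)
```

The upper-left 3x3 block is the rotation and the last column holds the translation, so `T` maps points from the object frame to the camera frame under the stated assumption.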

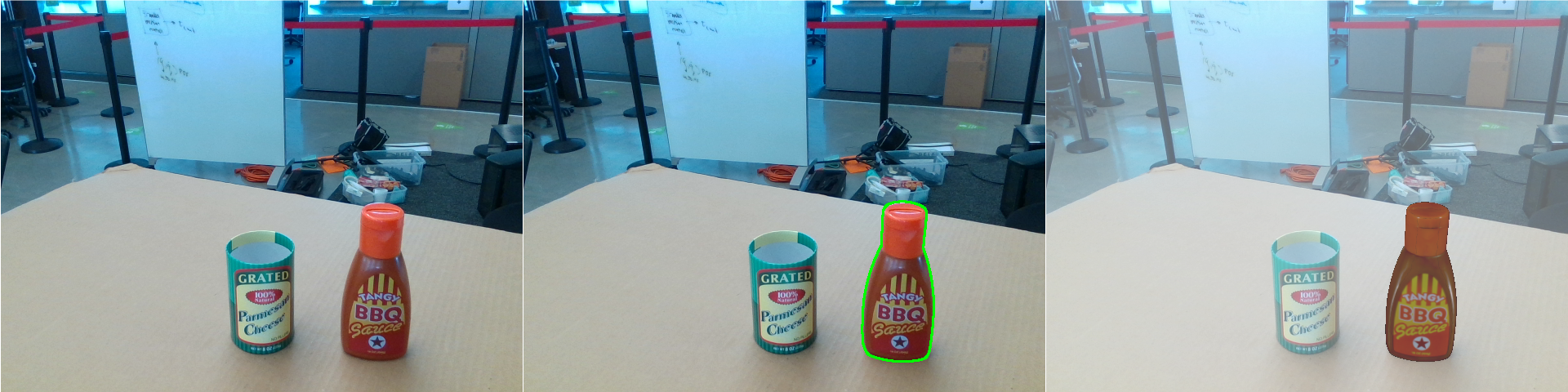

The --vis-poses option writes several visualization files:

    $HAPPYPOSE_DATA_DIR/examples/barbecue-sauce/
        visualizations/contour_overlay.png
        visualizations/mesh_overlay.png
        visualizations/all_results.png